Some other aspects of complexity theory that I found interesting:

Even if the agents that make up the system’s population are complicated and heterogeneous (just as people in a learning network are), this variability tends to “average out”: while the agents may be complicated, the objects can be modeled as homogeneous as a first approximation. It goes without saying that humans are extremely complicated as a species, but I don’t quite agree that the objects we create can be modeled so simply; some of the artifacts a learning network generates are extremely complex. Still, I have a sense that, viewed reductively, the objects will turn out to be homogeneous.

In the first part of my post on The Complexity Of Learning, I mentioned competition as an element inherent to complexity in networks. Because of competition, the population of objects often becomes polarized into two opposing groups. I have seen this happen in workplaces; another example that immediately comes to mind is the bulls and bears of financial markets. Competition is what stabilizes the system: without it, we would probably see wild fluctuations in the system’s behavior. As I mentioned before, learning networks definitely compete for resources, and in larger workplaces I have seen two distinct camps, or rather schools of thought, that tend to hold divergent views of the system.

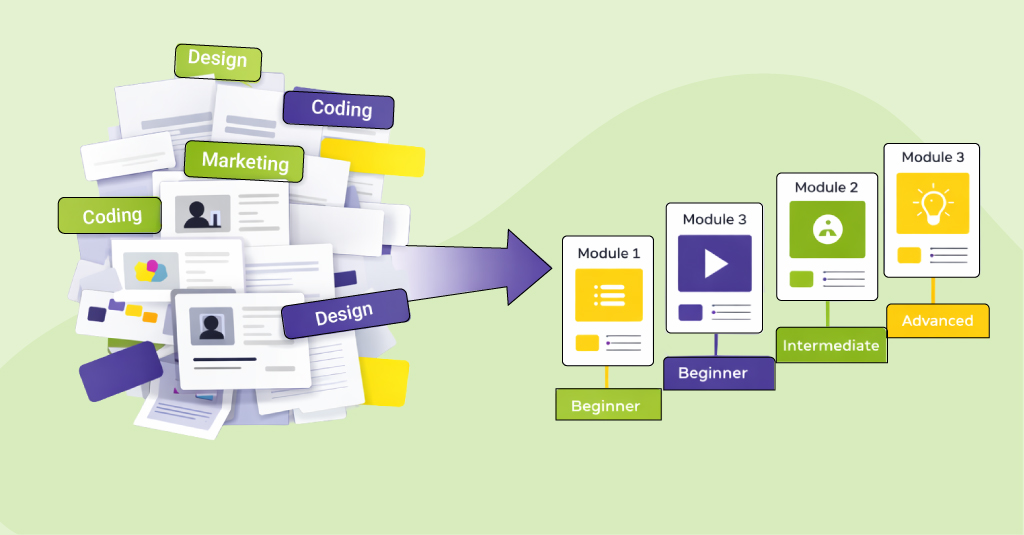

The fact that it’s sometimes possible to steer the behavior of a system by manipulating a subset of its objects is quite interesting, and it may prove very important for understanding learning networks. In my view, this essentially proposes that agents can alter the state of the system by modifying objects. From a learning perspective, the objects are quite simply the pieces of learning content, the tools, and other ‘things’ (they take varied forms). A group of agents could steer the network in ways that promote learning; learning designers would then essentially be manipulating network objects so that they promote learning. As a side note, I’m not saying this group of agents would be the ‘training department’ or ‘L&D’: for this assumption about learning networks to work, the agents would need to be influential and well connected (in a network sense).

It’s interesting that learning networks are structurally robust: taking agents and objects away from the network causes only temporary damage, and the network gradually reconfigures to co-opt new agents and objects into the system. In the corporate world we uproot individuals and transfer them from workplace to workplace and from company to company. I’d hazard a guess that this has a negative effect in the short term, until the individual’s network reconfigures to include agents and objects from the new environment. Given the state of information technology and telecommunications, these days the network can suffer minimal damage: while the ‘locale’ changes, and the network distances between agents change, the system remains robust.

The overall behavior of a system, and the ability of its agents to obtain resources, depend on both the amount of available resources and the level of connectivity (network structure) between agents. The relationship is not a simple proportional one. When resources are only moderate, adding a small amount of connectivity widens the disparity between successful and unsuccessful agents, whereas adding a high level of connectivity reduces this disparity. By contrast, when resources are plentiful, adding a small amount of connectivity is enough to raise the average success rate and enable most agents to succeed. So in a learning network, it is not just about resources but also about the connectivity between agents. This may be a problem in workplace learning networks, especially ones where resources are plentiful but connectivity between agents is not.

Over the course of my reading, I’ve discovered that behavioral outcomes in complex systems tend to follow a power-law distribution: smaller events are the most common (as expected), but extreme events also occur far more often than a bell-curve distribution would suggest (unexpected). I’m not quite sure about this and must give it more thought, but offhand I equate behavioral outcomes with the results of learning transactions occurring in the network. So while there are numerous, continuous (seemingly insignificant) learning exchanges between agents, occasionally there are large-scale exchanges that look like major learning events.
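To make the “extreme events are more common” idea concrete, here is a minimal sketch in Python comparing how likely an event several times larger than average is under a power law versus an exponential distribution with the same mean. The Pareto form, the exponent alpha = 2.5, and the function names are my own illustrative assumptions, not something taken from the complexity literature I’ve been reading.

```python
import math

def pareto_tail(x, x_min=1.0, alpha=2.5):
    """P(X > x) for a Pareto (power-law) distribution.

    x_min and alpha are illustrative assumptions, not fitted values.
    """
    if x < x_min:
        return 1.0
    return (x_min / x) ** alpha

def exponential_tail(x, mean):
    """P(X > x) for an exponential (thin-tailed) distribution with the given mean."""
    return math.exp(-x / mean)

alpha, x_min = 2.5, 1.0
# Mean of the Pareto distribution (finite only when alpha > 1);
# the exponential is given the same mean so the comparison is fair.
mean = alpha * x_min / (alpha - 1)

# Compare the chance of an event 2, 10 and 50 times the average size.
for multiple in (2, 10, 50):
    x = multiple * mean
    print(f"{multiple:>3}x mean: power law {pareto_tail(x, x_min, alpha):.2e}, "
          f"exponential {exponential_tail(x, mean):.2e}")
```

At twice the mean, the exponential actually gives the higher probability, but at ten times the mean the power law is already about 20 times more likely, and at fifty times the gap spans many orders of magnitude. That long tail is what makes the occasional “major learning event” plausible rather than freakish.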

The science of complexity offers a different lens for viewing learning, one apart from the cog-psych lens that designers so often wear. There is much to learn, all the more so in an increasingly connected world.