If we assume that the need is identified by analysis, then true impact comes from two things: design and measurement. Once we’ve determined the need, we should know which measure is inadequate and what level it should be at. Then we should design our solutions to change that measurement, and test to see whether we’re achieving it.

Design

The necessary goal is to align our design with the intended outcome. We know that there can be multiple reasons why performance isn’t as desired, so we need to ensure that we’re addressing all the reasons, and in ways that align with how we think, work, and learn.

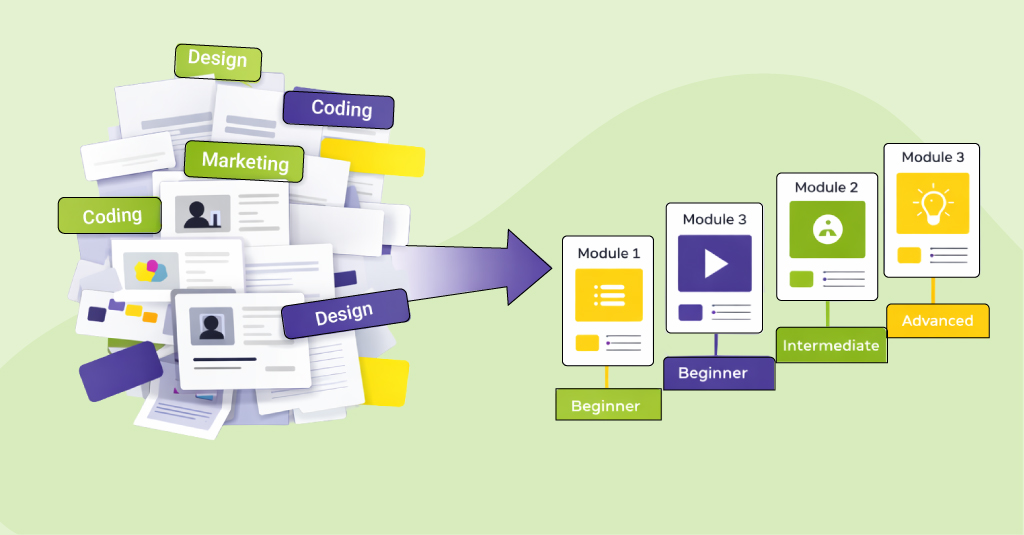

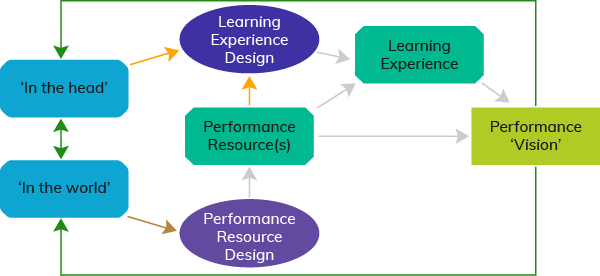

Our analysis may reveal multiple drivers of the problems. Incentives may not be aligned. People may not be receiving the correct messages, such as when supervisors say things like “forget what you learned in training, this is how we really do it”. There may be limitations or obstacles in the environment. There may not be the right tools or resources, or awareness of or access to them may be missing. Finally, learners may not have the necessary skills. Skill gaps are addressed through training; the tools may be knowledge tools that L&D could develop; and the other drivers may require working with other entities.

Ultimately, a vision of the final solution should be made and then acted upon. This should be done in alignment with what’s known about how we think and learn. In previous work, we’ve suggested working backward from that vision and implementing the other steps before developing the training, so that the training can reflect the necessary changes in other areas. Together, with all elements aligned, the solution should accomplish the necessary change. That is a matter of design; we then need to see whether our design has hit the target.

Measurement

Determining whether a necessary change has occurred requires measurement. There are a variety of levels of measurement, but there should be some effort to ascertain whether the necessary behavior is now in place and yielding the desired results.

We have substantial experience of initiatives that are well designed but don’t achieve the necessary results. Lessons from user experience, for instance, have robustly demonstrated the need for testing and iteration. Those lessons have transferred to newer design approaches like the Successive Approximation Model, a Lot Like Agile Management Approach, and Pebble in a Pond. All of these have moved from a waterfall to an iterative approach where initial drafts are tested and refined.

We test for two reasons. One is formative: to review and refine the design. The second is summative: to determine what we’ve achieved. The iterative approach gives us formative data to determine whether something needs to be changed.

What prompts iteration is measurement. That is, we want to test our interim result and see whether it’s good as is or needs tweaking. To make that determination, we need data. Data can tell us whether we’re having an impact, and whether that impact is sufficient. We want our measurements to align with our intentions, just as our design should.

Design targeted at achieving an outcome, and measurement to ascertain whether that outcome has been achieved, are what is required to have impact. Of course, the choice of outcome will determine what impact can be achieved, but once we’ve identified a need, we should know what outcome, and what impact, must occur.

In summary, achieving impactful learning involves strategic design and meticulous measurement. Addressing various performance drivers, aligning incentives, and overcoming obstacles are crucial design considerations. An iterative approach, such as the Successive Approximation Model, ensures continuous refinement through testing. Accurate measurement, both formative and summative, is essential for assessing success. For a deeper understanding, download our eBook, Designing for Learning Impact: Strategies and Implementation. Explore comprehensive insights to enhance your learning initiatives.