Most enterprise supply chain functions already operate on predictive planning and demand forecasting systems. Forecasts update automatically. Dashboards flag exceptions. Reports are accessible across levels. The tools are mature.

Yet decision quality has not moved at the same pace. Planning discussions still lean on habits. Supervisors override recommendations without fully examining the data behind them. The constraint is no longer system access but uneven operations data skills. In several cases, supply chain analytics training emphasized tool usage, not interpretation discipline.

When system upgrades do not alter frontline judgment, the limitation is rarely technical. The more relevant question becomes what type of workforce capability influences real decision behavior.

How Predictive Planning and Demand Forecasting Are Changing Operations Roles

In many enterprise environments, demand forecasting systems now generate multiple scenario outputs before planners begin their review. What used to be a manual projection exercise has shifted into a task of comparison and judgment. Planners assess probability ranges.

Supervisors monitor exception dashboards that surface performance deviations early. The role expectation has moved from producing numbers to interpreting them, yet capability design has not always followed that shift.

Common patterns begin to surface:

- Probability ranges misunderstood or treated as fixed values

- Override decisions made without deeper testing

- Alert fatigue reducing trust in system signals

These behaviors are not system failures. They reflect uneven operations data skills within redesigned roles. As responsibilities evolve, interpretation discipline becomes the next pressure point, particularly in how dashboards are read and acted upon.
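To make the first pattern concrete, here is a minimal sketch of what changes when a forecast is read as a range instead of a single number. The scenario values and the `shortfall_rate` helper are hypothetical, invented only for illustration; they do not come from any particular forecasting system.

```python
from statistics import quantiles

# Hypothetical weekly demand scenarios (units) from a forecasting system.
scenarios = [820, 870, 900, 910, 940, 960, 1000, 1050, 1120, 1300]

# quantiles(..., n=10) returns the nine decile cut points (P10 through P90).
deciles = quantiles(scenarios, n=10)
p10, p50, p90 = deciles[0], deciles[4], deciles[8]


def shortfall_rate(stock, demands):
    """Share of scenarios in which demand exceeds the chosen stock level."""
    return sum(d > stock for d in demands) / len(demands)


print(f"P10={p10:.0f}  P50={p50:.0f}  P90={p90:.0f}")
print(f"Stocking to P50 falls short in {shortfall_rate(p50, scenarios):.0%} of scenarios")
print(f"Stocking to P90 falls short in {shortfall_rate(p90, scenarios):.0%} of scenarios")
```

A planner who treats the midpoint as a fixed value is, in this illustrative data, accepting a shortfall in roughly half of the scenarios. Whether that is acceptable depends on service targets and holding costs, which is exactly the judgment the redesigned role calls for.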

Why Dashboard Interpretation Gaps Persist in Logistics and Supply Chain Teams

Even when dashboards are embedded across logistics functions, consistent interpretation does not follow automatically. Data visibility has improved, but clarity in reading it remains uneven. Supervisors often review performance metrics without clearly separating leading signals from historical results, which influences how issues are prioritized in daily planning meetings. Aggregated metrics, while efficient for reporting, can compress local variability into stable averages and hide risk building at specific facilities or routes.
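The aggregation effect can be sketched in a few lines. The facility names, on-time rates, and the 3-point decline threshold below are assumptions chosen only to illustrate the point, not figures from a real network.

```python
from statistics import mean

# Hypothetical on-time delivery rates (%) per facility over four weeks.
on_time = {
    "Facility A": [92, 94, 96, 98],  # improving
    "Facility B": [95, 95, 95, 95],  # flat
    "Facility C": [98, 96, 94, 92],  # steadily deteriorating
}

# Network-level average per week, as an aggregated dashboard tile would show it.
network_avg = [mean(rates[week] for rates in on_time.values()) for week in range(4)]


def trend(rates):
    """Change in points between the first and the last week."""
    return rates[-1] - rates[0]


# Assumed rule of thumb: flag any facility that lost 3 or more points.
at_risk = [name for name, rates in on_time.items() if trend(rates) <= -3]

print("Network average by week:", network_avg)
print("Facilities trending down:", at_risk)
```

In this sketch the network average never moves, while one facility loses six points over the same period. A supervisor who reads only the aggregate tile would see nothing to escalate.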

Where Interpretation Breaks Down in Practice

Escalations tend to be triggered by visible volume spikes rather than by early warning indicators. Discussions lean toward past performance instead of forward movement. Over time, these habits shape response patterns. Logistics upskilling therefore cannot focus only on tool familiarity.

If the data is only partially understood, routing and inventory decisions tend to follow habits more than evidence.

What Data-Driven Decision-Making in Logistics Actually Requires

In logistics environments, data-driven decision-making shows up in ordinary but high-impact choices. A routing change is not simply a map adjustment; it reflects an assessment of delivery patterns, delay frequency, and capacity pressure. Inventory buffer adjustments require reviewing demand movement alongside supply consistency, not just last month’s numbers. Supplier variability, even when visible in reports, must be interpreted in the context of production schedules and customer commitments.

The effect becomes visible in workflow stability. In one enterprise network, clearer performance visibility at the shift level reduced recurring escalations and improved retention metrics within six months. The system remained the same, but the way teams discussed and interpreted the data shifted. That shift depended on building a data-literate workforce capable of interpreting patterns, not just accessing them.

When daily decisions begin to rely on consistent data interpretation, the next question is not about tools but about how learning structures support that behavior across roles.

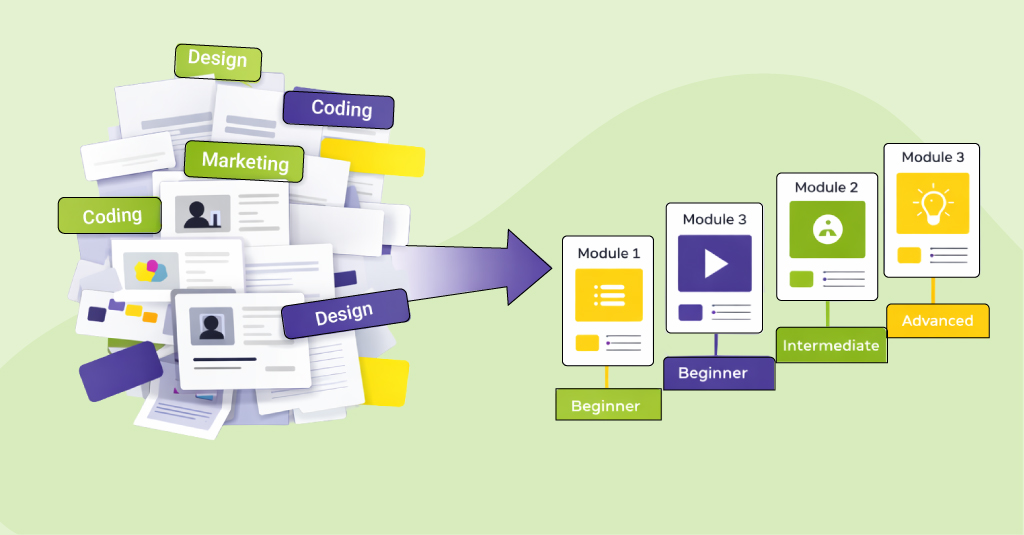

How to Blend Operational Training with Analytics Skills Without Disrupting Daily Work

In most enterprise settings, operational training and analytics capability are still designed separately. Teams attend classroom sessions or complete standard eLearning modules, then return to planning meetings where time pressure overrides reflection. If interpretation habits are expected to change, learning cannot sit outside the workflow. It has to connect directly with the decisions supervisors and planners already make.

Embedding Analytics in the Flow of Work

That usually means tying learning directly to everyday decisions instead of pulling teams away from operations. Two design shifts tend to matter:

- Short scenario simulations based on actual routing or inventory situations

- Role-based data exercises built around current dashboards

When supply chain analytics training, delivered through contextual eLearning and practice-based formats, becomes part of the operations curriculum instead of a parallel track, application improves. The question then moves beyond course design and toward how the organization supports capability ownership across functions.

What Upside Learning Delivers in Enterprise Supply Chain Capability Programs

Upside Learning works with enterprise organizations to design role-specific custom eLearning that aligns with operational decision-making. In supply chain environments, this translates into designing learning structures that reflect how operational decisions are actually made. Instead of separating analytics education from workflow, custom eLearning programs are built around real planning cycles, reporting dashboards, and decision checkpoints already in use.

Scenario-based simulations are drawn from routing adjustments and inventory reviews. Dashboard interpretation modules mirror existing reports. Reinforcement happens within daily work, which makes interpretation part of routine decisions rather than isolated training.

For organizations reassessing how analytics investments connect to workforce capability, Upside Learning works with enterprise teams to design learning that strengthens decision behavior at the operational layer.

To explore how this applies within your environment, connect with the Upside Learning team.

FAQs About Embedding Skills Assessment into Performance Workflows

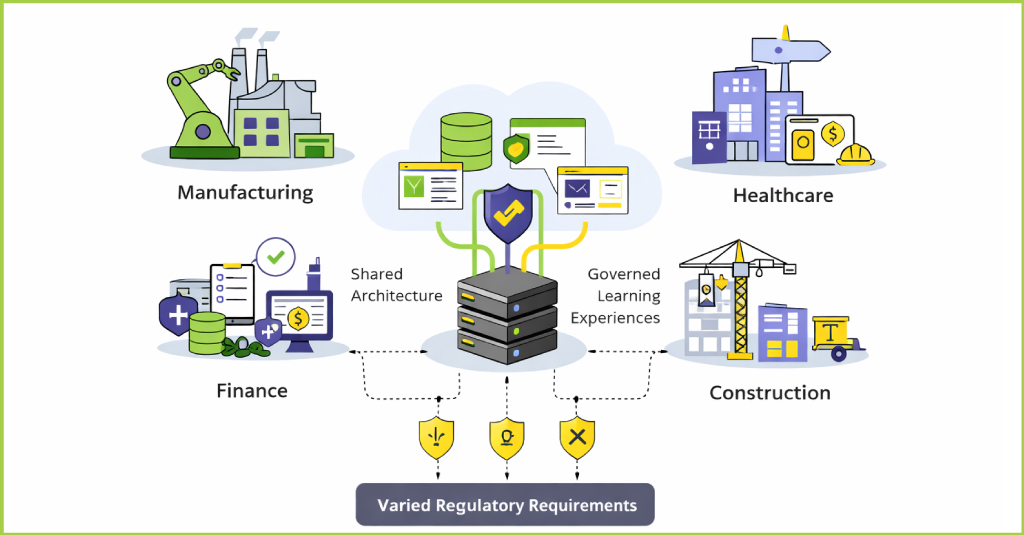

Workflow-embedded skills assessment measures how employees apply skills during real work tasks instead of only testing knowledge at the end of a course. Traditional LMS-based assessment validates recall or simulated responses. Embedded performance-based assessment captures live signals such as CRM entries, quality metrics, safety adherence, or manager-validated observations. This approach allows enterprise learning teams to measure actual capability rather than course completion.

Traditional skills assessment measures knowledge at a single point in time inside the LMS. It does not track whether skills are consistently applied in real workflows. When learning data is disconnected from operational metrics, organizations measure participation instead of capability.

Performance-based assessment improves training ROI analysis by linking skill application to measurable business outcomes. Instead of reporting course completions, organizations track changes in metrics such as defect rates, deal conversion, resolution time, or safety incidents. When applied skill signals align with operational improvements, training ROI conversations shift from assumptions to evidence. This strengthens executive confidence in enterprise learning investments.

Start with one high-impact role. Define three to five observable behaviors aligned with skills mapping. Use existing CRM, ERP, or operational data to capture performance signals. Combine system data with manager validation and compare baseline and post-training results over 60 to 90 days.
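The baseline comparison in the last step can be sketched as follows. The metric (average resolution time in hours), the weekly values, and the `pct_change` helper are hypothetical and stand in for whatever signal the existing operational systems already capture.

```python
from statistics import mean

# Hypothetical weekly average resolution time (hours) for one role.
baseline = [14, 12, 15, 13, 14, 12, 13, 15]               # 8 weeks before training
post_training = [11, 10, 9, 10, 8, 9, 8, 7, 8, 7, 7, 6]   # about 12 weeks after


def pct_change(before, after):
    """Percentage change in the mean of a metric between two periods."""
    before_mean, after_mean = mean(before), mean(after)
    return (after_mean - before_mean) / before_mean * 100


change = pct_change(baseline, post_training)
print(f"Baseline mean: {mean(baseline):.1f} h")
print(f"Post-training mean: {mean(post_training):.1f} h")
print(f"Change: {change:+.1f}%")
```

A before-and-after difference like this is a starting signal, not proof that training caused the change. Pairing it with manager validation, as described above, helps rule out seasonal or staffing effects.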

Skills mapping defines the required capabilities for each role. Embedded skills assessment validates whether those capabilities appear in real work. When operational evidence aligns with mapped skills, organizations gain accurate visibility into workforce capability.

Embedded assessment shows which skills improve productivity, quality, and revenue. It helps learning and development focus investment on measurable outcomes and strengthens executive confidence in employee training and development.

Ready to Move from Assessment to Measurable Capability?

Understanding the gap is one thing. Redesigning how capability is measured across systems is another.

If you are evaluating how to evolve your skills assessment approach, start with a structured diagnostic conversation. Identify where assessment currently sits, how performance-based assessment signals are defined, and what data already exists inside your operational platforms.

At Upside Learning, we work with enterprise teams to translate strategic intent into practical implementation plans. That includes pilot scoping, stakeholder alignment, skills mapping refinement, governance structuring, and training ROI measurement design.

If you want a practical roadmap tailored to your skills-based learning environment, let’s begin with a focused discussion around your current architecture and business priorities.