Most enterprise workforce plans still move on an annual or biannual cycle. Skills are reviewed during budgeting windows, refreshed in spreadsheets, validated against role taxonomies, and then left largely untouched until the next cycle begins.

In parallel, hiring plans change quarter by quarter, internal roles evolve mid-year, and learning teams adjust priorities based on immediate demand. The planning artifacts remain stable even as the operating reality shifts around them.

This gap rarely shows up in strategy decks, but it surfaces in slower hiring decisions, delayed redeployment, and repeated recalibration of learning investments.

The issue is often described as a data problem, but that framing is incomplete. Many organizations have skills models, libraries, and role definitions in place. What they do not consistently have is a way to see how those models age once work shifts. Decision cycles begin to outpace how skills information is maintained, and the signals leaders rely on no longer reflect current capability.

The limitation is not the existence of structure, but what that structure fails to surface when timing starts to matter.

Why Skills Frameworks Reach Their Limit in Workforce Planning

Most enterprise skills frameworks are designed to bring order to complexity. They establish a shared vocabulary, connect roles to expected capability, and support consistency across functions and geographies. In large organizations, that structure is necessary. Without it, workforce planning fragments quickly, and learning priorities lose coherence.

Frameworks tend to work best at the level of definition. They clarify which skills matter, how roles are intended to differ, and where progression should occur. In skills-based workforce planning discussions, they often anchor conversations around coverage and standardization. They help organizations agree on what capability should look like, at least in documented form.

The limitation appears once roles begin to shift in ways the framework was not built to track continuously. Work does not change in clean steps. Responsibilities blur across roles, temporary skill demand emerges through projects, and tools reshape tasks faster than taxonomies are refreshed.

In these moments, enterprise skills mapping remains structurally sound but increasingly detached from lived work. It reflects design intent rather than current execution.

This gap becomes more visible when frameworks are used to inform decisions rather than to support alignment. Hiring teams search for capabilities that are formally documented but unevenly distributed. Internal mobility relies on role matches that no longer reflect actual task mix. Learning investments are mapped to defined gaps, even as those gaps shift beneath the surface. The framework holds, but its signal weakens.

Over time, the issue stops being about framework quality. The constraint is time. Planning artifacts are static by nature, while workforce movement is not. As decisions shift from alignment to execution, the limits of structure show less as inaccuracy and more as delay.

That is usually when organizations begin looking beyond frameworks, even if they do not name what they are looking for yet.

How Skills Intelligence Differs from Skills Frameworks in Practice

Skills frameworks tend to describe intent by cataloguing what the organization believes matters at a point in time. They align roles to expected capability and create a shared language across functions, which helps during planning discussions but becomes less reliable once decisions start moving faster.

In workforce conversations, frameworks often act as reference points rather than live inputs: useful for consistency, but limited for timing.

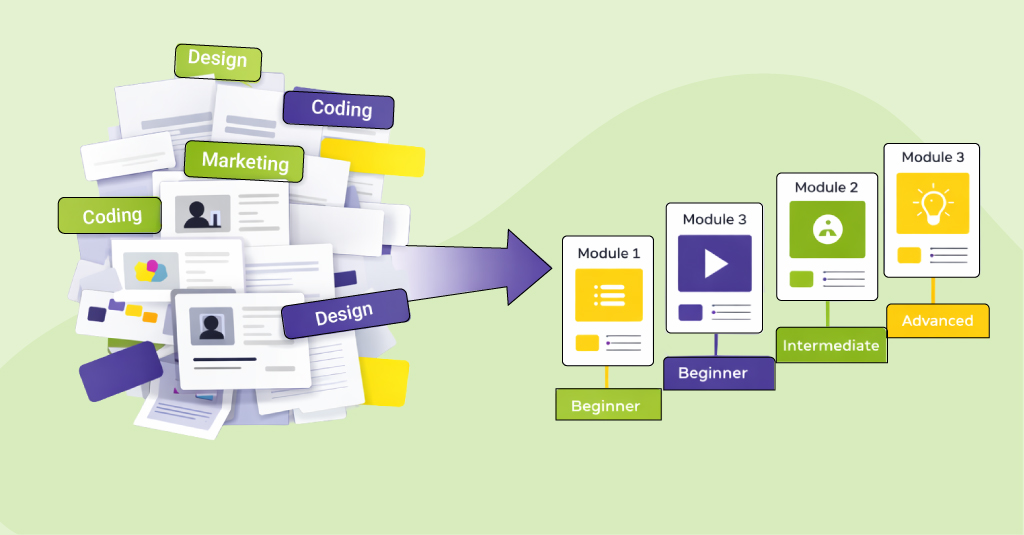

Skills intelligence operates differently, because it does not start with role definitions or taxonomies, and the emphasis shifts from classification to detection.

The focus moves toward observable data and how that data behaves across systems, including activity patterns, proficiency indicators, learning behavior, and performance inputs. Capability is treated less as something to be declared and more as something to be inferred.

In practice, this difference becomes visible in a few areas.

- Skills intelligence looks at how capability is distributed and exercised, not how it is labeled. A framework may state that a role requires advanced data analysis, but that distinction matters less once decisions are no longer annual: hiring demand shifts, project teams form and dissolve, and learning priorities change mid-cycle. A framework can confirm alignment, but it cannot signal drift.

- Workforce skills analytics treats skills as variables that change as work changes, not as fixed attributes attached to roles. The underlying data gets revisited and reworked as new inputs appear, often unevenly and not on a clean schedule. It does not replace existing learning platforms, HR systems, or talent marketplaces. Instead, it pulls fragments of signal from each of them, so hiring activity, internal movement, and development effort can be viewed together rather than interpreted in isolation.
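As a rough illustration of viewing fragments of signal together, the sketch below groups skill observations from several systems into one view per employee and skill. The `SkillSignal` record, the source labels, and the `combine_signals` helper are all hypothetical names chosen for this example. They do not describe any particular product or data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SkillSignal:
    """One observation of a skill. All fields are illustrative assumptions."""
    employee: str
    skill: str
    source: str        # hypothetical label, e.g. "lms", "hris", "projects"
    observed_on: date


def combine_signals(signals):
    """Group signals by (employee, skill) and summarize sources and recency."""
    grouped = defaultdict(list)
    for signal in signals:
        grouped[(signal.employee, signal.skill)].append(signal)

    view = {}
    for key, items in grouped.items():
        view[key] = {
            # Which systems have seen this skill for this person
            "sources": sorted({s.source for s in items}),
            # The most recent date any system observed it
            "last_observed": max(s.observed_on for s in items),
        }
    return view
```

A skill that appears only in learning records, or whose latest observation is old, then stands out without any change to the source systems themselves.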

Over time, the value shifts from documentation toward observable capability.

The next question is rarely about definition.

Once skills begin to function as data signals instead of static descriptors, gaps emerge that role alignment alone never exposed, and the focus naturally turns to understanding what those gaps actually look like at scale.

What Workforce Data Reveals That Role-Based Views Do Not

Role-based views compress variation by design, assuming that once someone occupies a role, the expected capability is present and usable at a consistent level. Workforce data rarely supports that assumption when examined in aggregate. As skills information is pulled across teams, projects, and systems, the picture becomes less about role coverage and more about how capability actually shows up in day-to-day work.

When teams begin looking beyond titles and job architecture, a different set of signals tends to surface.

- Roles appear fully staffed, yet execution slows in predictable places. Work queues build around specific tasks rather than entire roles.

- Escalations cluster around the same individuals, even when multiple people nominally hold the required skill.

- Certain capabilities show up repeatedly in learning records but only sporadically in applied work, suggesting exposure without depth.

- Skills exist across the workforce but are concentrated in teams that are already operating at capacity.

- Adjacent roles carry overlapping capabilities, yet internal movement remains limited because the role labels do not signal transferability.

- Proficiency plateaus below what current work requires, even though headcount and role coverage remain unchanged.

In one organization, workforce skills analytics showed that a critical technical capability was broadly present at a foundational level, but only a small subset of employees applied it with enough depth to support complex work. Enterprise skills mapping suggested adequate coverage, while operational data pointed to a clear constraint. Because role definitions still appeared sound, the mismatch did not trigger hiring or development action.
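The difference between coverage and depth can be made concrete with a small calculation. The sketch below assumes a hypothetical proficiency scale from 0 to 4 and illustrative thresholds for "foundational" and "applied" levels. The function name, the scale, and the thresholds are assumptions for this example, not figures from the organization described above.

```python
def coverage_and_depth(proficiency_by_employee, foundational=1, applied=3):
    """Return (coverage, depth) as fractions of the group.

    coverage: share of people at or above the foundational level
    depth:    share of people at or above the applied level
    The 0-4 scale and both thresholds are illustrative assumptions.
    """
    total = len(proficiency_by_employee)
    if total == 0:
        return 0.0, 0.0

    levels = list(proficiency_by_employee.values())
    covered = sum(1 for level in levels if level >= foundational)
    deep = sum(1 for level in levels if level >= applied)
    return covered / total, deep / total
```

A group can show high coverage and low depth at the same time, which is the pattern a role-based view tends to hide.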

What gets labeled as a shortage is often misread. Capability is present, but it is unevenly distributed, applied shallowly, available too late, or located away from where demand concentrates.

Analysis alone has limits. When insight reflects an earlier operating state, its value erodes, and attention shifts from interpretation to visibility and timing.

Why Workforce Decisions Fail Without Real-Time Skills Visibility

Most workforce decisions do not fail because leaders lack data. They fail because the data they rely on reflects a past state of the organization. Skills information is reviewed, validated, and discussed, but by the time it informs action, work has already shifted.

Projects start, pause, or change scope, and internal movement accelerates unevenly across teams rather than following formal planning cycles. The decision logic remains anchored to a snapshot that is no longer current.

This gap shows up differently depending on the decision being made, but the underlying issue is consistent. Visibility arrives after the point where it can shape the decision.

| Decision context | What planning assumes | What is actually happening | Where timing breaks |
|---|---|---|---|
| Hiring | Required skills are scarce in the market | Capability exists internally but is unevenly applied | Internal data is outdated, so external hiring is triggered first |
| Internal Mobility | Roles signal readiness for movement | Transferable skills sit outside role boundaries | Skill signals are refreshed too slowly to support movement |
| Upskilling | Gaps are defined through annual reviews | Skill depth erodes as work complexity increases | Learning priorities lag behind operational change |
| Workforce Allocation | Capacity aligns with role coverage | Demand concentrates around specific tasks | Task-level signals are not visible in planning cycles |

What keeps these failures in place is that they do not surface as obvious breakdowns. Planning structures still appear coherent, and decisions still look defensible when viewed at a single point in time.

The distortion sits in how timing quietly reshapes outcomes. Skills information reflects an earlier operating state, while work continues to change beneath planning assumptions.

Over time, this normalizes decision-making based on stale signals. Visibility lags become routine, and workforce insight shifts from informing action to justifying it after the fact.
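One simple way to make this lag visible is to compare the age of each skill record with the date of the decision it informs. The sketch below is a minimal illustration under stated assumptions: the `stale_skills` function and the 90-day threshold are hypothetical, and a suitable threshold would depend on how quickly the work in question changes.

```python
from datetime import date


def stale_skills(last_refreshed, decision_date, max_age_days=90):
    """Return skills whose data is older than max_age_days at decision time.

    last_refreshed maps a skill name to the date its data was last updated.
    The 90-day default is an illustrative assumption, not a recommendation.
    """
    return sorted(
        skill
        for skill, refreshed in last_refreshed.items()
        if (decision_date - refreshed).days > max_age_days
    )
```

Flagging stale inputs before a hiring or mobility decision does not fix the underlying data, but it shows which assumptions rest on an earlier operating state.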

Use Cases in Hiring, Mobility, and Upskilling

Once skills visibility moves closer to real time, its impact shows up first in decisions that carry cost and irreversibility. Hiring, internal movement, and upskilling all depend on assumptions about current capability. When those assumptions shift from static models to observable signals, the decision logic changes in uneven but predictable ways.

- Hiring tends to be the earliest pressure point. Requisitions are often triggered by perceived absence rather than verified constraint. With clearer skills visibility, hiring teams start seeing where capability exists but is underutilized, fragmented, or locked inside role boundaries. In some cases, external hiring slows because internal supply becomes visible earlier. In others, roles are reframed because the original requirement reflected outdated task composition rather than current work. The decision does not become easier, but it becomes more precise.

- Internal mobility behaves differently. Movement rarely fails because skills are missing. It fails because readiness is inferred from titles rather than evidence. When skills data is surfaced across adjacent roles and projects, transferability becomes easier to assess without waiting for formal role changes. Movement starts to follow capability patterns instead of organizational charts. This does not eliminate friction, but it changes where friction sits, away from eligibility debates and toward capacity planning.

- Upskilling decisions tend to lag both hiring and mobility because they are often tied to review cycles. With stronger skills intelligence, development priorities shift from broad coverage to targeted depth. Learning investments move closer to actual execution gaps rather than anticipated ones. Programs become narrower, sometimes shorter, and occasionally unnecessary. The signal is not that learning matters less, but that it has to respond to change faster than planning cycles allow.

Across all three areas, the common shift is not strategic. It is operational. Decisions rely less on declared readiness and more on observed capability.

Over time, that reorients workforce planning away from static alignment and toward continuous adjustment, which is uncomfortable, but increasingly difficult to avoid.

How Upside Learning Supports Skills Intelligence in Practice

Skills intelligence changes what learning systems are expected to respond to. As capability signals become more current and granular, learning design has to move closer to how decisions are actually made, rather than following static frameworks or annual plans.

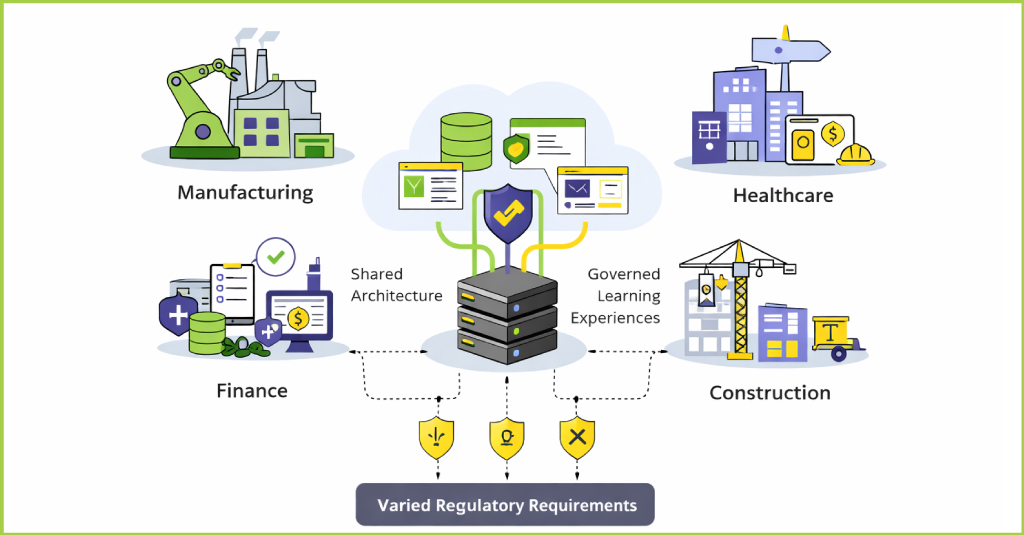

Upside Learning fits into this shift at the execution layer.

- Learning interventions are designed around observed capability gaps, not assumed role requirements.

- Programs are structured to adjust as skill signals change, rather than locking content to fixed curricula.

- Learning architecture is aligned with workforce data inputs, so hiring, mobility, and upskilling decisions can be supported without waiting for formal role updates.

The role here is not to define skills intelligence or manage the data layer, but to ensure learning systems can respond to it in practice. This involves aligning learning design, structure, and governance with shifting capability signals, so learning can adjust as work changes rather than waiting on formal role updates or fixed planning cycles.

As organizations move toward more continuous skills-based workforce planning, this execution layer becomes an underlying dependency that enables decisions without drawing attention to itself.

Learn more about Upside Learning and our custom skills-based learning solutions for skills-based workforce planning initiatives.
