Assessments are a key component of eLearning and are variously seen as the barometer of the elusive and much-debated “ROI of learning”. More often than not, though, they are seen as evidence of how good the eLearning is, as proof of learner completion and, of course, as the BIG one: evidence of compliance. “They all got the required 80% pass mark!” Assessments are also a source of great comfort, because they give us tangible and seemingly irrefutable proof that our learners have learnt!

Over the years, we have developed some very clever forms of assessment, with a plethora of question types delivered in random order to keep our learners on their toes! But nearly all assessments are based on one key metric: “numbers”! The number of people who passed (or not), the number of people who are ‘compliant’ (or not), the number of people who completed the course (or not), and so on. But do these numbers mean people actually learnt? Are they clearly linked to improved knowledge and skills? Are the people with the right “numbers” better at their jobs? Is the organisation benefiting from these “numbers”?

Are we assessing the right aspects of learning? It’s an old argument, but are we testing ‘knowledge retention’ rather than understanding? And are we merely testing knowledge rather than building it? We seem happy to test what learners know and ignore what they don’t. True?

It’s possibly a generalisation to say that all assessments are ruled by ‘numbers’. But even where a ‘pass’ mark is not required, we tend to use the numbers to gauge levels of knowledge and awareness within the organisation, and then cite them as evidence that “training is working”.

But to me, the bigger issue is this: what does achieving 80% (or any other number) actually mean? Does it mean learners know enough to satisfy the inspector or whoever else comes knocking? Does it mean their level of knowledge is what they need to do their jobs? Or simply that they know 80% of what they are required to know? And, critically, do the learners themselves feel satisfied that they ‘know their stuff’?

Now consider this from the perspective of what they don’t know: the 20%. Especially in the case of compliance, are we (the companies, the authorities, the learners themselves) comfortable with the fact that they DON’T know 20%? Are we willing to accept the risk of 20% less knowledge because they have proved they know 80%? Would we be happy if our doctor knew only 80% of what he was talking about? Or the pilot of a plane we might be on? Or our accountants, our managers, and everyone else walking around secure in the knowledge that they know ‘enough’?

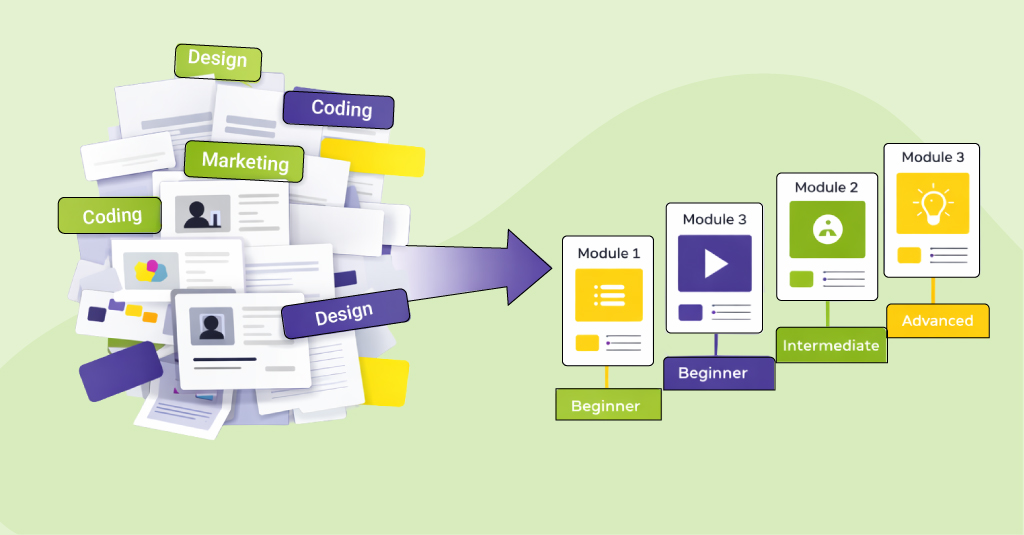

I am not suggesting that we all can or must know 100% – we can’t. What I am suggesting is that we use assessments to identify and target what we don’t know. Let’s design training that addresses the gaps in our knowledge and supports and builds competency, rather than solutions based on compromise.

As learners, we must look for the missing 20%. What is it that we should know but don’t? And where and how can we get hold of it? As organisations, let’s recognise, manage and mitigate the risk this 20% presents. And as learning professionals, let’s design interventions that contribute to this journey of ‘filling in the blanks’, and assess for learning, not of learning.
